A year can seem unending. For an entire year, Julie Cordua tracked a man who regularly posted new images and videos online of him raping and torturing a very young girl. The predator was smart enough to hide any hints that might reveal his identity. “I felt increasingly angry and helpless,” Cordua says. She only knew about the case because two FBI agents had called her and asked for her help identifying the girl. “And I couldn’t help them, couldn’t do anything to stop the abuse,” Cordua remembers. “This is the type of failure that keeps you up at night, and it’s the type that clarifies our purpose.”

This life-changing case occurred seven years ago. As the CEO of the nonprofit Thorn, Cordua is now at the forefront of helping tech companies and law enforcement curb the online epidemic of child sexual abuse material (CSAM). It took an entire year for the FBI to find and free the girl. Herself a mother of three, Cordua couldn’t get the images out of her mind. “I understood that this girl is not an isolated case,” she says from her office in Los Angeles.

Thorn was founded in 2009 by Ashton Kutcher and Demi Moore, originally under the name DNA. Its goal was to stop sex trafficking by using software to match online ads on infamous sites like Backpage.com with missing children. Cordua, 45, was a marketing expert for Bono’s RED program, raising $160 million for his AIDS work in Africa, before becoming CEO of Thorn. The experience with the FBI made it clear to her that the face-recognition technology Thorn had used was not enough, and she became determined to stop CSAM. She refocused Thorn’s mission on developing online tools that can help identify abusers as well as educate law enforcement, tech companies, parents and children. The foundation was renamed Thorn: “Just like thorns protect a rose, and the roses here are our children and their future.”

The foundation claims to “house the first engineering and data-science team focused solely on developing new technologies to combat online child sexual abuse.” Its flagship software program, Spotlight, is available to law enforcement at no cost. According to Thorn, it has reduced the time to find abused kids by 65 percent and identifies an average of nine vulnerable kids per day. Cordua says more than 17,000 children have been identified in the last five years with the help of Thorn’s software, which is now being used in 55 countries.

Cordua confides that what is hardest for her is knowing that tech solutions exist, but that governments and tech companies would have to mount a unified effort to implement them. For instance, new tech tools let platforms flag problematic images automatically, eliminating the need for an underpaid worker to watch hours of traumatizing videos. Comparable to a virtual alarm button, these tools could alert platforms or police as soon as an image of a new abuse victim is uploaded so the case can be prioritized and the images prevented from going viral. But so far, most tech companies, from Dropbox to Apple, don’t scan uploads for illegal images. U.S. law requires them to report abuse material immediately, but not to look for it.

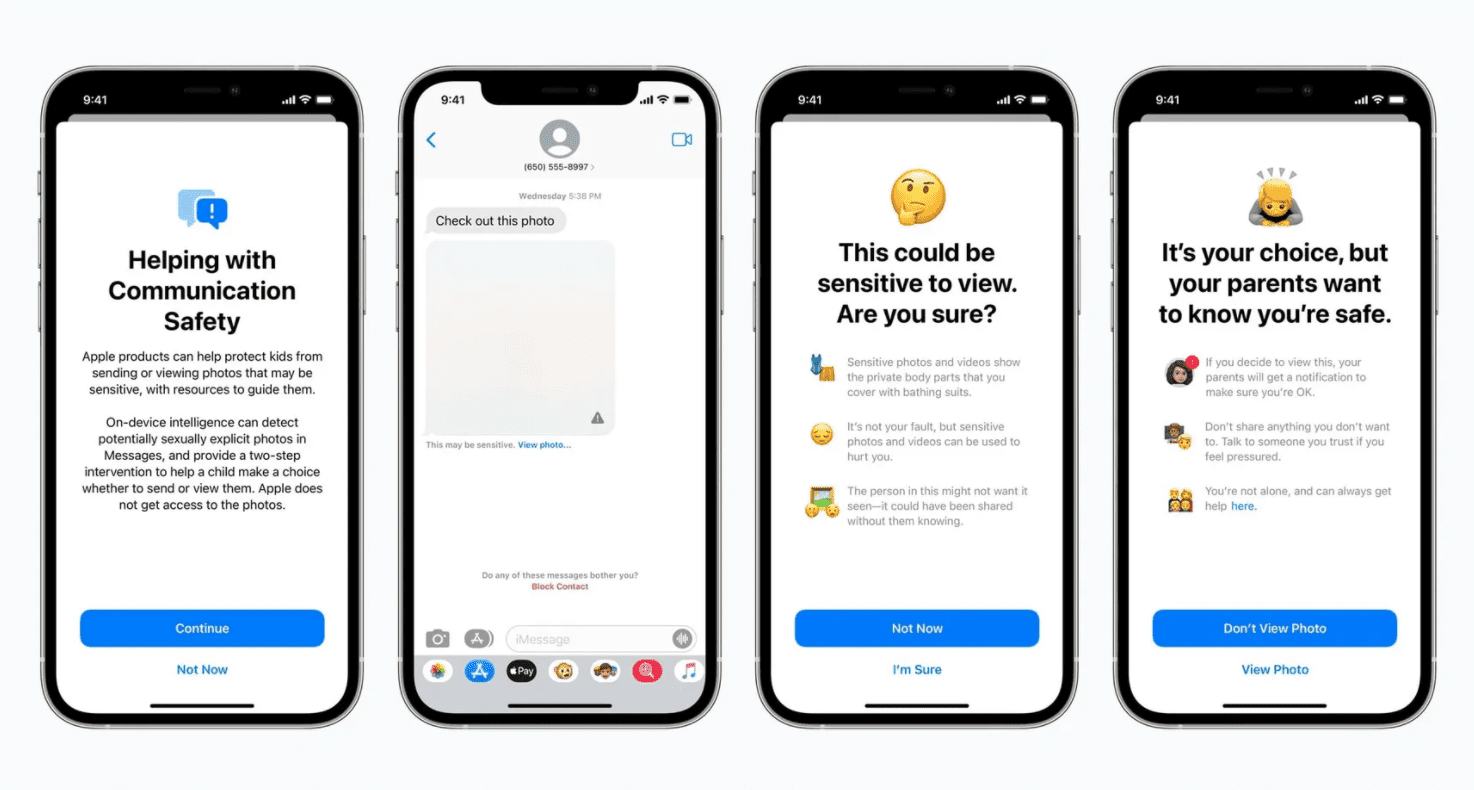

When they do, they often face criticism. This August, when Apple announced updated features to curb the epidemic spread of CSAM, including an upload filter and scanning iCloud photos, a backlash ensued, and Apple postponed the introduction. “Make no mistake,” Princeton professor and computer science expert Jonathan Mayer warned, “Apple is gambling with security, privacy and free speech worldwide.”

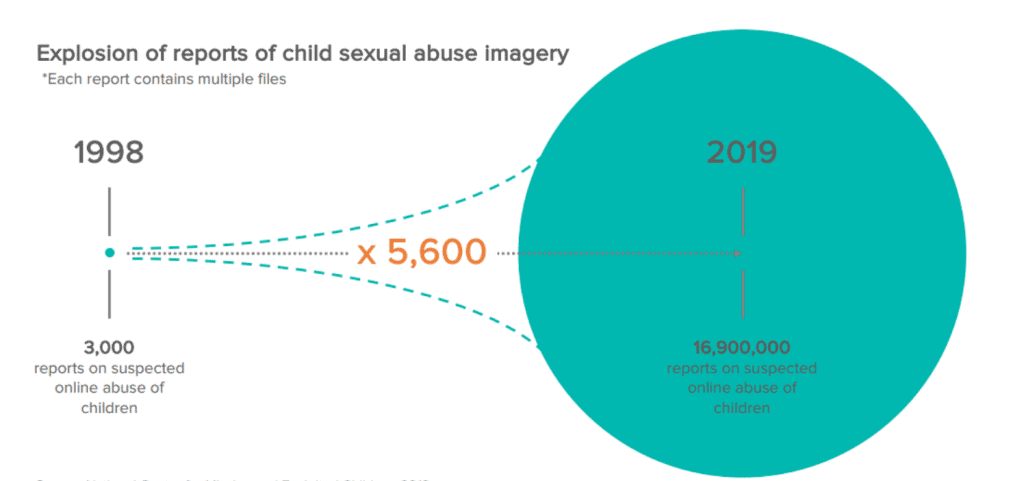

Yet the problem urgently needs addressing. Some 21.7 million reports of CSAM were filed with the National Center for Missing & Exploited Children (NCMEC) last year alone, a 28 percent increase from 2019 (and most reports contain several cases, so this amounts to roughly 80 million cases, and that’s only the ones that get reported). The distribution of CSAM has increased 10,000 percent in the last ten years, and 60 percent of the images show children under 12 years old. “A lot of them are what we refer to as preverbal, meaning they can’t talk, very young children whose abuse and assault and rape is documented on images and videos,” Cordua told the New York Times. Behind every CSAM file is a child in need of support, or a survivor whose trauma continues to spread online for many years after their escape. Since the internet is the most important “market” for predators, that is the place where they have to be beaten, say experts, with tech tools.

I assumed most of the horrific images would be hidden on the dark net. But according to Cordua and other experts, predators have little to fear when they upload abusive content to their cloud on Amazon or Apple. Extensive research by the New York Times shows that “cloud storage services, including those owned by Amazon and Microsoft, do not scan for any of the illegal content at all, while other companies, like Snap, scan for photos but not videos.” Anybody could store brutal abuse images in their digital cloud without worry of being unmasked. But it’s not just the Big Five that avert their eyes. Cordua shares several examples of small businesses that had no idea their platforms were being used to hide CSAM. “When they upload our software, it usually only takes a few hours before the first CSAM are flagged. The owners are always shocked.”

A fragmented system

The distribution of child pornography was nearly eliminated in the 1980s, simply because it became too risky for predators to send the materials by mail. The anonymity of the internet led to an explosion of CSAM, often with online pressure from abusers to upload increasingly graphic images.

To find this material, companies use codes called “hashes” to identify problematic images automatically, without the need to review each one individually (or peek into users’ private messages). But each platform uses its own system. Facebook and Google, for instance, couldn’t support each other in discovering CSAM because their systems weren’t compatible. They also didn’t have a way to distinguish old images from images of children who were currently being abused, whose rescue needs to be prioritized.
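For readers curious about what matching by hash looks like in practice, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any platform’s or Thorn’s actual system: the function names and sample data are hypothetical, and it uses an exact cryptographic hash (SHA-256) as a stand-in, whereas production systems generally rely on perceptual hashes that still match after an image is resized or re-encoded.

```python
import hashlib


def file_hash(data: bytes) -> str:
    """Return the hex SHA-256 digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def flag_uploads(uploads: dict[str, bytes], known_hashes: set[str]) -> list[str]:
    """Return the names of uploads whose hash appears in the known list.

    Only hashes are compared, so no person has to view the files.
    """
    return [name for name, data in uploads.items()
            if file_hash(data) in known_hashes]


# Hypothetical data: a list of hashes of previously reported files,
# and two new uploads, one of which is byte-identical to a reported file.
known_hashes = {file_hash(b"previously reported file")}
uploads = {
    "a.jpg": b"previously reported file",
    "b.jpg": b"holiday photo",
}

flagged = flag_uploads(uploads, known_hashes)
print(flagged)
```

The sketch also hints at why incompatible systems are a problem: a hash list is only useful to another platform if both sides compute hashes the same way, since the same file hashed under two different schemes yields codes that cannot be compared.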

This is where Thorn comes in. “Our first, most basic premise is that all of this data must be connected,” says Cordua. Because CSAM is marketed internationally, Thorn developed a program called Safer that lets platforms sync their codes. Thorn is now working with more than 8,000 companies worldwide, and Safer has helped tech platforms identify over 180,000 CSAM files this year alone. Cordua is hesitant to reveal how the software works because she does not want to tip off abusers.

“We know that discovering how to defend children from sexual abuse while maintaining user privacy is difficult,” Cordua acknowledges. “To be successful we need companies like Apple and many others to continue to collectively turn their innovation and ingenuity to this issue, creating platforms that prioritize both privacy and safety.”

The EU’s new guidelines for platforms highlight how complex striking this balance can be. In Germany, for instance, platforms currently store data for seven days, which means that even when an abuser’s IP address is identified, most data has already been erased before law enforcement can act. Reiner Becker, a former police officer and head of the nonprofit German Children’s Aid, accuses the platforms of protecting abusers’ identity. “I used to be fiercely against storing data. But when someone has abused children, they have forgone their right to privacy.”

Privacy advocates often disagree. When Google identified an abuser in 2014 through his private emails and alerted law enforcement, the tech community protested. (Google automatically scans emails for keywords, usually for targeting ads.) But Cordua believes abuse victims also have a claim to privacy under this logic. “What about the privacy of the children?” she asks. “We know that it is extremely traumatizing for survivors to know that the images of their abuse are shared online for years and decades.”

Does allowing online monitoring for one crime, child abuse, raise the risk that such surveillance could then creep into other aspects of our online lives? What about depictions of other crimes, like drug use? “Everybody wants to talk about the slippery slopes of ifs, buts, maybes, but the other slippery slope is an absolute that we know about,” Ashton Kutcher recently commented in a New York Times podcast. “And that slippery slope is these kids and the evidence of their rape being syndicated online.” He acknowledges that the software could be used “nefariously,” and that addressing the issue means “walking a tightrope between privacy and morality.”

Watching online abuse continue unabated for more than a year, as Cordua initially had to do, left her with one conviction: “Inaction is not an option. Every child whose sexual abuse continues to be enabled by Apple’s platforms deserves better.”